Reactionary Feminist

Feminism Against Progress

“Some of the sharpest cultural observations on the internet.”

N.S. Lyons, The Upheaval


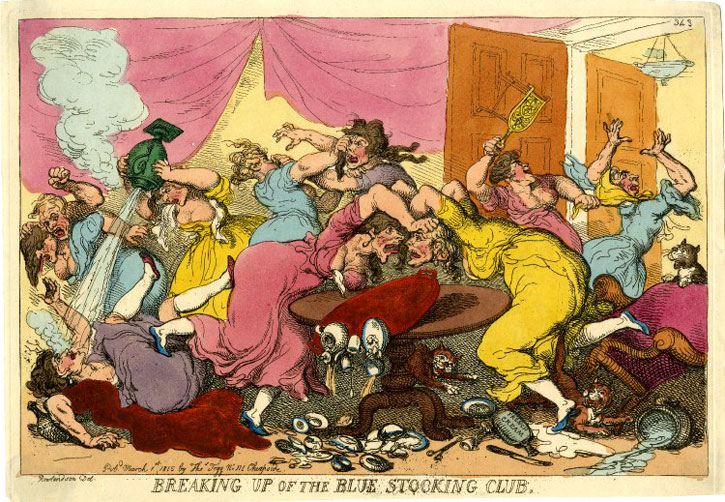

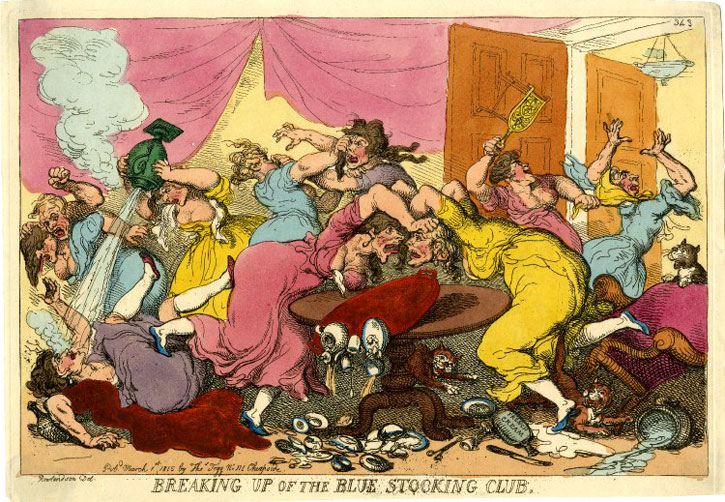
